We developed a simple ML model with Teachable Machine and trained it on the most common early symptoms of COVID-19. With this model, a person can check their symptoms against those of COVID-19. Depending on the severity, their details can be shared with nearby medical facilities to confirm an appointment with a doctor.

How does it work?

First, the user enters their name and contact details.

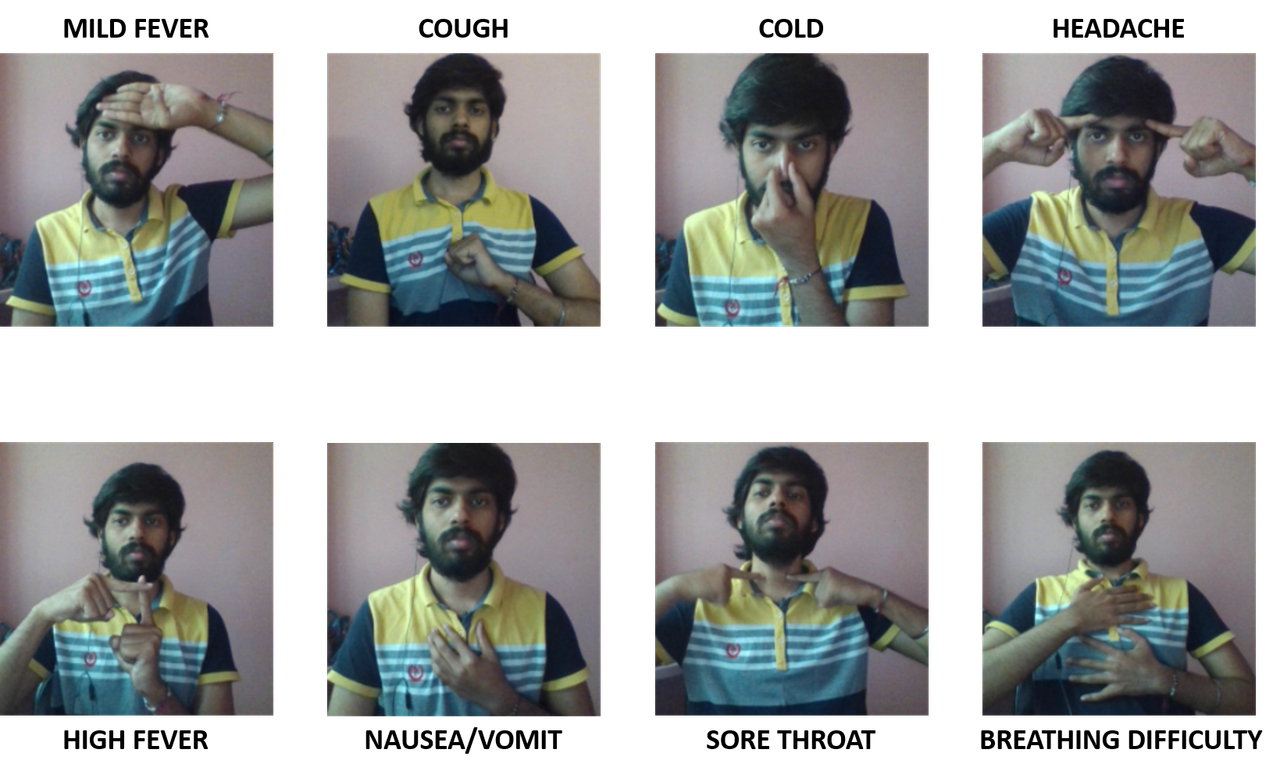

Next, each basic symptom is assigned a simple gesture.

Once the person has used the AI and their symptoms have been recorded, the report is sent to their mobile phone via SMS and is also stored in a CSV file as a record.
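The CSV record-keeping step could look roughly like the following in Python. This is only a sketch: the column names (`name`, `contact`, `symptoms`) and the function `append_record` are assumptions made for illustration, not the layout used in the actual project.

```python
import csv
from pathlib import Path

# Hypothetical column layout; the project does not specify one.
FIELDS = ["name", "contact", "symptoms"]


def append_record(path, name, contact, symptoms):
    """Append one symptom report to a CSV file, adding a header if the file is new."""
    file = Path(path)
    is_new = not file.exists() or file.stat().st_size == 0
    with file.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        # Join the symptoms into one cell so each report stays on a single row.
        writer.writerow(
            {"name": name, "contact": contact, "symptoms": "; ".join(symptoms)}
        )
```

Calling `append_record("records.csv", "Test User", "0000000000", ["fever", "cough"])` would add one row per report, so the file grows into a simple log of all checks.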

If the person would like to share these details with nearby medical facilities, they have to provide the PINCODE of their residential location, which makes it easy to book an appointment with a doctor at a nearby hospital.

How are the details shared through SMS?

The recorded details are stored as a simple list of strings. We use the IoT extension in PictoBlox, which lets us make HTTP API requests.

We created an applet in IFTTT and used the Webhooks service to send a copy of the medical record via SMS to the contact number the person provided.
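For readers who want to see what such a Webhooks call looks like outside PictoBlox, here is a minimal Python sketch. It builds a POST request to the IFTTT Webhooks trigger URL (`https://maker.ifttt.com/trigger/{event}/with/key/{key}`) with the usual `value1`/`value2` JSON fields. The event name, the key, the use of `value1` for the phone number and `value2` for the report, and the function name `build_ifttt_request` are all assumptions; the actual applet may be configured differently.

```python
import json
import urllib.request


def build_ifttt_request(event, key, report_lines, phone):
    """Build (but do not send) a POST request for an IFTTT Webhooks trigger."""
    url = f"https://maker.ifttt.com/trigger/{event}/with/key/{key}"
    # Assumed mapping: value1 carries the phone number, value2 the report text.
    payload = {"value1": phone, "value2": "\n".join(report_lines)}
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# To actually fire the applet (requires a real event name and key):
# urllib.request.urlopen(build_ifttt_request(...), timeout=10)
```

Keeping the request construction separate from the network call makes it easy to inspect the URL and payload before anything is sent.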

How are the doctor and hospital location confirmed and shared?

For this we created a simple database in Excel and saved it as a CSV file. The file is accessed in PictoBlox using NLP (Natural Language Processing). It contains the PINCODE, the DOCTOR, and the GPS location of the hospital. When a person provides a PINCODE, we first search for doctors who are available at a specific time; the patient details and the AI-generated file are then shared with the doctor. Once the doctor confirms, the appointment details and hospital location are sent to the person via SMS through IFTTT and Webhooks.
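The PINCODE lookup can be illustrated with a short Python sketch. The `PINCODE`, `DOCTOR`, and `GPS` columns come from the description above; the `FROM` and `TO` availability columns, the function name `find_available_doctors`, and every row in the sample data are placeholders invented for this example, since the real file layout is not shown.

```python
import csv
import io
from datetime import time


def find_available_doctors(csv_text, pincode, at):
    """Return doctors in the given PINCODE whose hours include the time `at`."""
    matches = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        start = time.fromisoformat(row["FROM"])  # assumed column
        end = time.fromisoformat(row["TO"])      # assumed column
        if row["PINCODE"].strip() == pincode and start <= at < end:
            matches.append({"doctor": row["DOCTOR"], "gps": row["GPS"]})
    return matches


# Placeholder data only; not taken from the project's database.
SAMPLE = """PINCODE,DOCTOR,GPS,FROM,TO
560001,Dr. A (placeholder),"12.9716,77.5946",09:00,13:00
560001,Dr. B (placeholder),"12.9750,77.6000",14:00,18:00
560002,Dr. C (placeholder),"12.9800,77.6100",09:00,17:00
"""
```

With this sample, `find_available_doctors(SAMPLE, "560001", time(10, 30))` returns only the first placeholder doctor, because the second one starts at 14:00 and the third is in a different PINCODE.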

The main idea is to help people with speech disabilities by using AI, ML, and IoT services to help them get better medical attention.